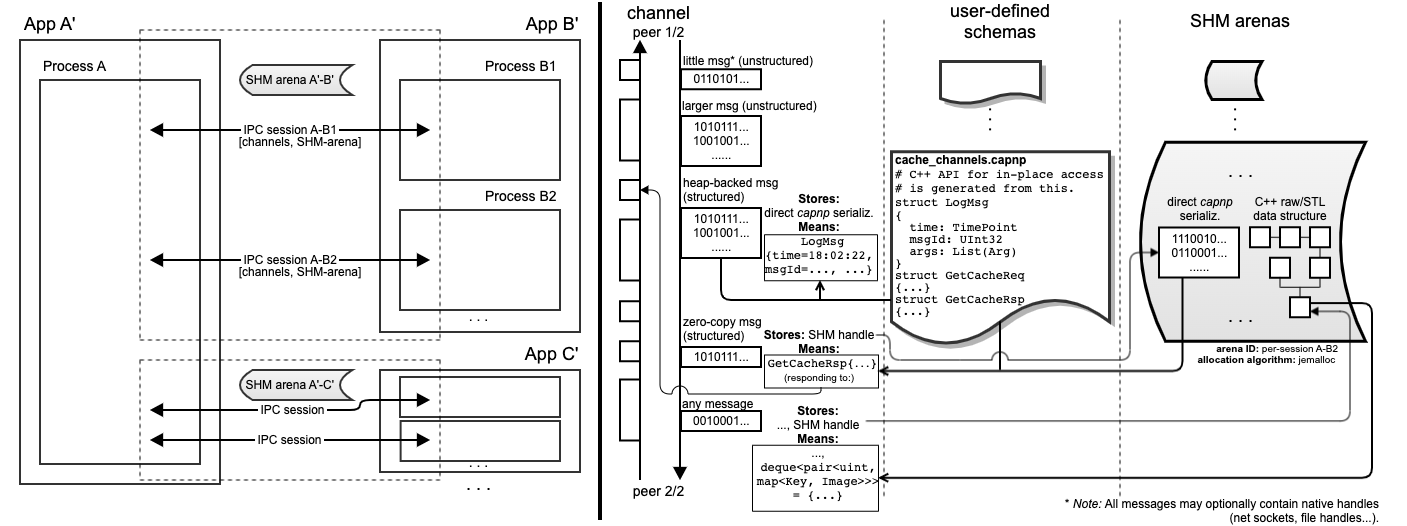

- On the left – Flow-IPC's mission: applications speaking to each other performantly, in organized fashion. The infrastructure inside the dotted-line boxes is provided by Flow-IPC. Perhaps most centrally this offers communication channels over which one can transmit messages with a few lines of code.

- On the right – zooming into a single channel; and how it transmits data of various kinds, especially without copying (therefore at high speed).

- "capnp" stands for Cap'n Proto.

- Some readers have said this diagram is too "busy." If that's your impression: Please do not worry about the details. It is a survey of what and how one could transmit via Flow-IPC; different details might appeal to different users.

- Ultimately, if you've got a message or data structure to share between processes, Flow-IPC will let you do it with a few lines of code.

We hope a picture is worth a thousand words, but please do scroll down to read a few words anyway.

If you'd prefer a synopsis with code snippets right away, try API Overview / Synopses.

Executive summary: Why does Flow-IPC exist?

Multi-process microservice systems need to communicate between processes efficiently. Existing microservice communication frameworks are elegant at a high level but can add unacceptable latency out of the box. (This is true of even lower-level tools including the popular and powerful gRPC, usually written around Protocol Buffers serialization.) Low-level interprocess communication (IPC) solutions, typically custom-written on-demand to address this problem, struggle to do so comprehensively and in reusable fashion. Teams repeatedly spend resources on challenges like structured data and session cleanup. These issues make it difficult to break monolithic systems into more resilient multi-process systems that are also maximally performant.

Flow-IPC is a modern C++ library that solves these problems. It adds virtually zero latency. Structured data are represented using the high-speed Cap’n Proto (capnp) serialization library, which is integrated directly into our shared memory (SHM) system. The Flow-IPC SHM system extends a commercial-grade memory manager (jemalloc, as used by FreeBSD and Meta). Overall, this approach eliminates all memory copying (end-to-end zero copy).

Flow-IPC features a session-based channel management model. A session is a conversation between two programs; to start talking one only needs the name of the other program. Resource cleanup, in case of exit or failure of either program, is automatic. Flow-IPC’s sessions are also safety-minded as to the identities and permissions at both ends of the conversation.

Flow-IPC’s API allows developers to easily adapt existing code to a multi-process model. Instead of each dev team writing their own IPC implementation piecemeal, Flow-IPC provides a highly efficient standard that can be used across many projects.

Welcome to the guided Manual. It explains how to use Flow-IPC with a gentle learning curve in mind. It is arranged in top-down order. (You may also be interested in the Reference.)

Feature overview: What is Flow-IPC?

Flow-IPC:

- is a modern C++ library with a concept-based API in the spirit of STL/Boost;

- enables near-zero-latency zero-copy messaging between processes (via behind-the-scenes use of the below SHM solution);

- transmits messages containing binary data, native handles, and/or structured data (defined via Cap'n Proto);

- provides a shared memory (SHM) solution

  - with out-of-the-box ability to transmit arbitrarily complex combinations of scalars, structs, and STL-compliant containers thereof;

  - that integrates with commercial-grade memory managers (a/k/a malloc() providers).

    - In particular we integrate with jemalloc, a thread-caching memory manager at the core of FreeBSD, Meta, and others.
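To make the zero-copy idea concrete, here is a minimal sketch of the underlying mechanism using plain POSIX calls (mmap(), fork(), waitpid()). It is not Flow-IPC's API: Shared_msg and share_via_shm() are hypothetical names invented for this illustration, and the sketch assumes a Linux-like system where MAP_ANONYMOUS is available. The writer builds the message directly inside the shared mapping, and the reader sees it in place, with no transfer through a pipe or socket.

```cpp
#include <sys/mman.h>
#include <sys/wait.h>
#include <unistd.h>
#include <cassert>
#include <cstring>
#include <string>

// Hypothetical message layout for this sketch only; not a Flow-IPC type.
struct Shared_msg
{
  int m_len;
  char m_text[64];
};

// Parent and child share one anonymous mapping. The child writes the message
// in place; the parent then reads it from the same physical pages.
// Returns the text the parent observed, or an empty string on failure.
std::string share_via_shm(const char* text)
{
  assert(text != nullptr);

  void* const mem = ::mmap(nullptr, sizeof(Shared_msg), PROT_READ | PROT_WRITE,
                           MAP_SHARED | MAP_ANONYMOUS, -1, 0);
  if (mem == MAP_FAILED) { return {}; }
  auto* const msg = static_cast<Shared_msg*>(mem);
  msg->m_len = 0;

  const pid_t child = ::fork();
  if (child < 0)
  {
    ::munmap(mem, sizeof(Shared_msg));
    return {};
  }
  if (child == 0)
  {
    // Child process: build the message directly inside shared memory.
    std::strncpy(msg->m_text, text, sizeof(msg->m_text) - 1);
    msg->m_text[sizeof(msg->m_text) - 1] = '\0';
    msg->m_len = static_cast<int>(std::strlen(msg->m_text));
    ::_exit(0);
  }

  // Parent process: the child's exit is our (crude) synchronization point.
  int status = 0;
  ::waitpid(child, &status, 0);

  // The std::string copy here is only for returning a value conveniently;
  // the parent could just as well keep using msg->m_text in place.
  std::string result(msg->m_text, static_cast<size_t>(msg->m_len));
  ::munmap(mem, sizeof(Shared_msg));
  return result;
}
```

Calling share_via_shm("hello") from main() yields "hello" in the parent. A real system also needs allocation inside the shared region, container support, synchronization, and cleanup after crashes, which is the kind of work the SHM solution described above takes on.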

A key feature of Flow-IPC is pain-free setup of process-to-process conversations (sessions), so that users need not worry about coordinating individual shared-resource naming between processes, not to mention kernel-persistent resource cleanup.

Flow-IPC provides two ways to integrate with your applications' event loops. These can be intermixed.

- The async-I/O API automatically starts threads as needed to offload work onto multi-processor cores.

- The sync_io API supplies lighter-weight objects allowing you full control over each application's thread structure, hooking into reactor-style (poll(), epoll_wait(), etc.) or proactor (boost.asio) event loops. As a result context switching is minimized.
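For readers unfamiliar with the reactor style mentioned above, the following is a minimal sketch of a poll()-based loop in which the application, not the library, owns the thread. It does not use the sync_io API itself: Mini_reactor, watch_readable(), run_once(), and reactor_demo() are hypothetical names for this illustration, and a pipe stands in for whatever descriptor an IPC transport would ask the loop to watch.

```cpp
#include <poll.h>
#include <unistd.h>
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical mini-reactor for illustration; not a Flow-IPC type.
class Mini_reactor
{
public:
  using Handler = std::function<void (int fd)>;

  // Ask to be told when `fd` becomes readable.
  void watch_readable(int fd, Handler on_readable)
  {
    assert(fd >= 0);
    m_fds.push_back({ fd, POLLIN, 0 });
    m_handlers.push_back(std::move(on_readable));
  }

  // One loop iteration: block in poll() for up to timeout_ms, then run the
  // handler of each ready descriptor. Returns the number of handlers run,
  // 0 on timeout, or -1 on error.
  int run_once(int timeout_ms)
  {
    const int n_ready = ::poll(m_fds.data(), m_fds.size(), timeout_ms);
    if (n_ready <= 0) { return n_ready; }

    int n_run = 0;
    for (size_t idx = 0; idx != m_fds.size(); ++idx)
    {
      if (m_fds[idx].revents & (POLLIN | POLLHUP))
      {
        m_handlers[idx](m_fds[idx].fd);
        ++n_run;
      }
    }
    return n_run;
  }

private:
  std::vector<pollfd> m_fds;
  std::vector<Handler> m_handlers;
};

// Demo: write "ping" into a pipe, then let the reactor dispatch the read.
std::string reactor_demo()
{
  int fds[2];
  if (::pipe(fds) != 0) { return {}; }

  std::string received;
  Mini_reactor reactor;
  reactor.watch_readable(fds[0], [&received](int fd)
  {
    char buf[32];
    const ssize_t n_read = ::read(fd, buf, sizeof(buf));
    if (n_read > 0) { received.assign(buf, static_cast<size_t>(n_read)); }
  });

  if (::write(fds[1], "ping", 4) == 4) { reactor.run_once(1000); }

  ::close(fds[0]);
  ::close(fds[1]);
  return received;
}
```

Here reactor_demo() returns "ping". The point of the pattern is that all handlers run on the thread that calls run_once(), so no extra threads or context switches are introduced by the loop itself.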

Lastly Flow-IPC supplies lower-level utilities facilitating work with POSIX and SHM-based message queues (MQs) and local (Unix domain) stream sockets.
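As background for the native-handle and Unix-domain-socket features above, here is a minimal sketch of how a native handle (file descriptor) can travel over a local stream socket using the standard sendmsg()/recvmsg() calls with SCM_RIGHTS ancillary data. This is the raw OS mechanism, not Flow-IPC's API: send_handle(), recv_handle(), and handle_passing_demo() are hypothetical names for this illustration. For brevity both socket ends live in one process; in practice they would be in two different processes.

```cpp
#include <sys/socket.h>
#include <sys/uio.h>
#include <unistd.h>
#include <cassert>
#include <cstring>
#include <string>

// Send a 1-byte payload plus the native handle fd_to_send over sock.
bool send_handle(int sock, int fd_to_send)
{
  assert((sock >= 0) && (fd_to_send >= 0));
  char payload = 'H';
  iovec iov{ &payload, 1 };
  alignas(cmsghdr) char ctl[CMSG_SPACE(sizeof(int))];
  std::memset(ctl, 0, sizeof(ctl));

  msghdr msg{};
  msg.msg_iov = &iov;
  msg.msg_iovlen = 1;
  msg.msg_control = ctl;
  msg.msg_controllen = sizeof(ctl);

  // The handle rides in ancillary data of type SCM_RIGHTS.
  cmsghdr* const cmsg = CMSG_FIRSTHDR(&msg);
  cmsg->cmsg_level = SOL_SOCKET;
  cmsg->cmsg_type = SCM_RIGHTS;
  cmsg->cmsg_len = CMSG_LEN(sizeof(int));
  std::memcpy(CMSG_DATA(cmsg), &fd_to_send, sizeof(int));

  return ::sendmsg(sock, &msg, 0) == 1;
}

// Receive one handle sent by send_handle(). Returns the new descriptor or -1.
int recv_handle(int sock)
{
  char payload = 0;
  iovec iov{ &payload, 1 };
  alignas(cmsghdr) char ctl[CMSG_SPACE(sizeof(int))];
  std::memset(ctl, 0, sizeof(ctl));

  msghdr msg{};
  msg.msg_iov = &iov;
  msg.msg_iovlen = 1;
  msg.msg_control = ctl;
  msg.msg_controllen = sizeof(ctl);

  if (::recvmsg(sock, &msg, 0) != 1) { return -1; }
  const cmsghdr* const cmsg = CMSG_FIRSTHDR(&msg);
  if ((!cmsg) || (cmsg->cmsg_level != SOL_SOCKET) || (cmsg->cmsg_type != SCM_RIGHTS))
  {
    return -1;
  }
  int fd = -1;
  std::memcpy(&fd, CMSG_DATA(cmsg), sizeof(int));
  return fd;
}

// Demo: pass a pipe's read end across a socket pair, then read through the
// received (duplicated) handle.
std::string handle_passing_demo()
{
  int socks[2];
  if (::socketpair(AF_UNIX, SOCK_STREAM, 0, socks) != 0) { return {}; }
  int pipe_fds[2];
  if (::pipe(pipe_fds) != 0)
  {
    ::close(socks[0]);
    ::close(socks[1]);
    return {};
  }

  std::string result;
  if (send_handle(socks[0], pipe_fds[0]))
  {
    const int received_fd = recv_handle(socks[1]);
    if (received_fd >= 0)
    {
      char buf[32];
      if (::write(pipe_fds[1], "via-handle", 10) == 10)
      {
        const ssize_t n_read = ::read(received_fd, buf, sizeof(buf));
        if (n_read > 0) { result.assign(buf, static_cast<size_t>(n_read)); }
      }
      ::close(received_fd);
    }
  }

  ::close(pipe_fds[0]);
  ::close(pipe_fds[1]);
  ::close(socks[0]);
  ::close(socks[1]);
  return result;
}
```

Here handle_passing_demo() returns "via-handle": the receiver gets its own descriptor referring to the same pipe, so data written to the pipe can be read through the received handle.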

Delving deeper

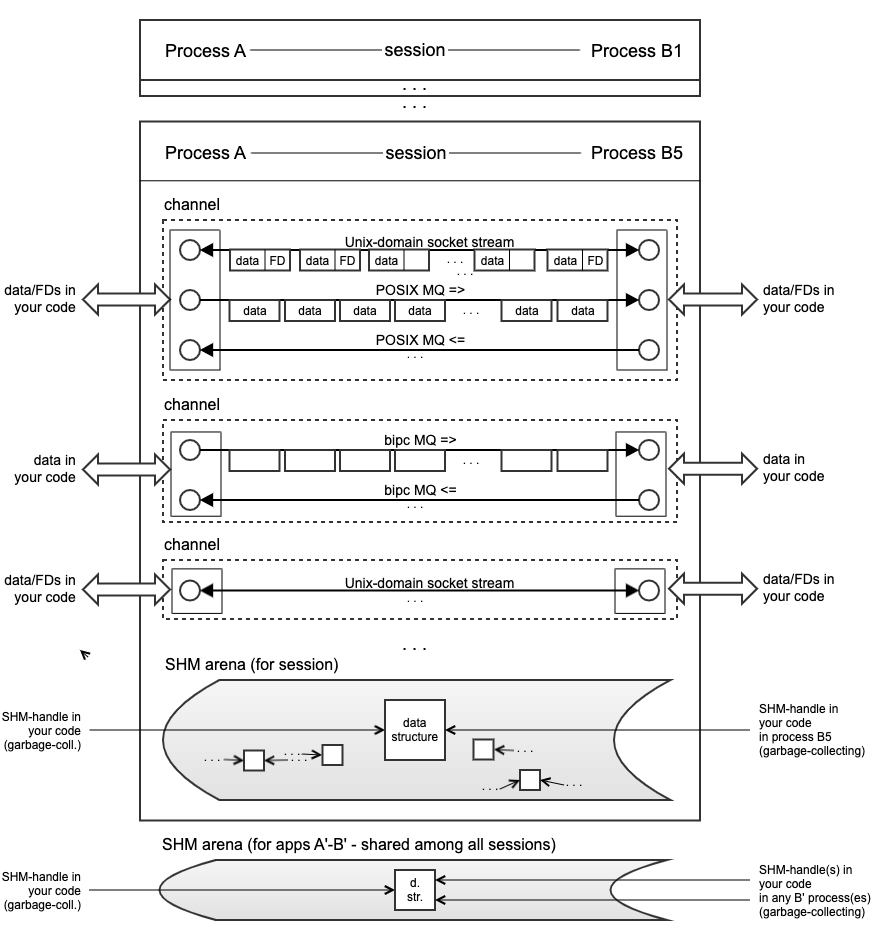

The high-level diagram above is a pretty good synopsis of the highest-impact features. The following diagram delves deeper, roughly introducing the core layer of ipc::transport. Then we begin a textual exploration in API Overview / Synopses.

Future directions of work

We feel this is a comprehensive work, but there is always more to achieve. Beyond maintenance and incremental features, here are some future-work ideas of a more strategic nature.

- Networked IPC: At the moment all IPC supported by Flow-IPC is between processes within a given machine (node), and a session can only be established that way for now. Extending this to establish IPC sessions over a network would be easy: the Unix-domain-socket-based low-level transports could be extended to work via TCP sockets (at least). This is a very natural next step for Flow-IPC development, and low-hanging fruit.

- Networked "shared memory" (RDMA): While the preceding bullet point would have clear utility, naturally the zero-copy aspect of the existing Flow-IPC cannot directly translate across a networked session: It is internally achieved using SHM, but there is no shared memory between two separate machines. There is, however, Remote Direct Memory Access (RDMA): direct memory access from the memory of one computer into that of another without involving either one's OS. While assuredly non-trivial, leveraging RDMA in Flow-IPC might allow for a major improvement over the feature in the preceding bullet point, analogously to how SHM-based zero-copy hugely improves upon basic IPC.

- Beyond C++: This is a C++ project at this time, but languages including Rust and Go have gained well-deserved popularity as well. In a similar way that (for example) Cap'n Proto's original core is in C++, but there are implementations for other languages, it would make sense for the same to happen for Flow-IPC. There are no technical stumbling blocks for this; it is only a question of time and effort.

- More architectures: As of this writing, Flow-IPC targets Linux + x86-64. We estimate that macOS/Darwin/FreeBSD support is attainable with a few weeks of work. (Windows could be tackled as well.) Supporting other hardware architectures, such as ARM64, is also doable and valuable. We'd like to do these things: by far most of the code is platform-independent, the exceptions being certain internal low-level details typically involving shared memory and pointer tagging in the SHM-jemalloc sub-component.

We welcome feedback, ideas, and (of course) pull requests of all kinds!

Onward!